Can We Read Speech Beyond the Lips? Rethinking RoI Selection for Deep Visual Speech Recognition

Published in IEEE FG, 2020

Recommended citation: Zhang, Y., Yang, S., Xiao, J., Shan, S. and Chen, X., 2020. Can We Read Speech Beyond the Lips? Rethinking RoI Selection for Deep Visual Speech Recognition. IEEE International Conference on Automatic Face and Gesture Recognition (FG), oral. https://arxiv.org/pdf/2003.03206.pdf

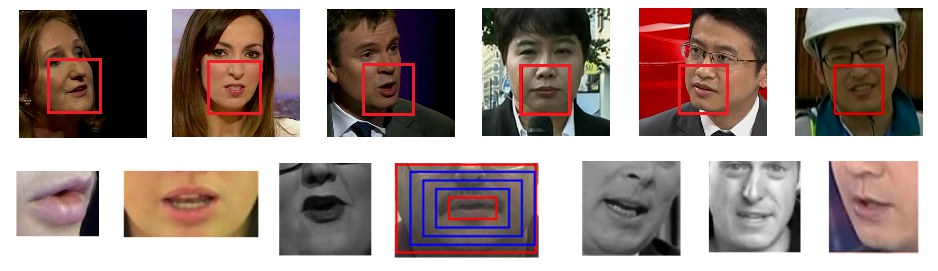

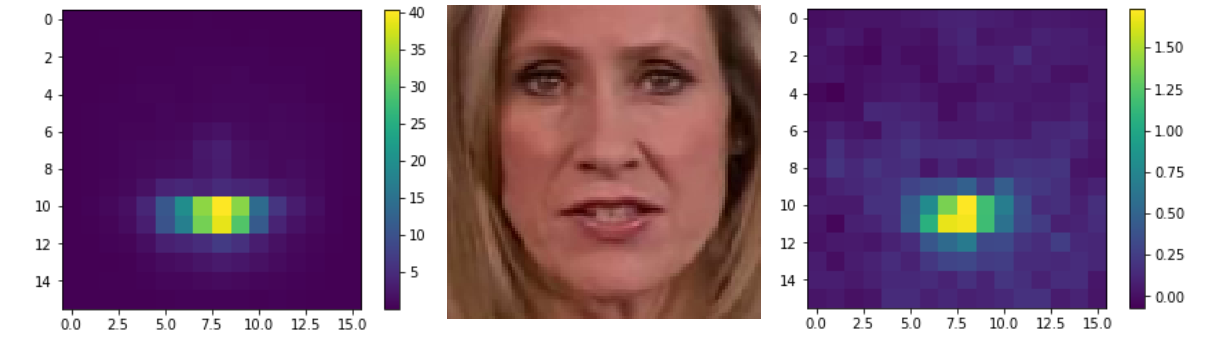

Abstract: Recent advances in deep learning have heightened interest among researchers in the field of visual speech recognition (VSR). Currently, most existing methods equate VSR with automatic lip reading, which attempts to recognise speech by analysing lip motion. However, human experience and psychological studies suggest that we do not always fix our gaze at each other’s lips during a face-to-face conversation, but rather scan the whole face repetitively. This inspires us to revisit a fundamental yet somehow overlooked problem: can VSR models benefit from reading extraoral facial regions, i.e. beyond the lips? In this paper, we perform a comprehensive study to evaluate the effects of different facial regions with state-of-the-art VSR models, including the mouth, the whole face, the upper face, and even the cheeks. Experiments are conducted on both word-level and sentence-level benchmarks with different characteristics. We find that despite the complex variations of the data, incorporating information from extraoral facial regions, even the upper face, consistently benefits VSR performance. Furthermore, we introduce a simple yet effective method based on Cutout to learn more discriminative features for face-based VSR, hoping to maximise the utility of information encoded in different facial regions. Our experiments show obvious improvements over existing state-of-the-art methods that use only the lip region as inputs, a result we believe would probably provide the VSR community with some new and exciting insights.
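For readers unfamiliar with Cutout, the sketch below shows the general idea as it might apply to a face clip: a random square patch is zeroed out so that a model cannot rely on any single facial region. This is an illustrative sketch only, not the authors' implementation. The function name `cutout_clip`, the `mask_size` parameter, the choice to share one patch across all frames, and the 88x88 input size in the usage lines are all assumptions made for this example.

```python
import numpy as np


def cutout_clip(clip, mask_size, rng=None):
    """Zero out one random square patch, shared by every frame of a clip.

    clip: array of shape (T, H, W) or (T, H, W, C).
    mask_size: approximate side length of the patch in pixels
               (hypothetical parameter, not taken from the paper).
    """
    rng = np.random.default_rng() if rng is None else rng
    _, height, width = clip.shape[:3]
    half = mask_size // 2

    # Sample the patch centre anywhere in the frame. As in the original
    # Cutout formulation, the patch is clipped where it crosses a border.
    cy = int(rng.integers(0, height))
    cx = int(rng.integers(0, width))
    y0, y1 = max(cy - half, 0), min(cy + half, height)
    x0, x1 = max(cx - half, 0), min(cx + half, width)

    # Work on a copy so the input clip is left untouched.
    out = clip.copy()
    out[:, y0:y1, x0:x1] = 0
    return out


# Usage: a dummy 5-frame grayscale clip (sizes chosen for illustration).
clip = np.ones((5, 88, 88), dtype=np.float32)
augmented = cutout_clip(clip, mask_size=44, rng=np.random.default_rng(0))
```

Sharing the patch across frames is one plausible design choice for video input, since it hides the same facial region for the whole utterance; a per-frame patch would be an equally valid variant to try.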